Learn how I built a macOS screen-capture-to-Notion workflow using AI, environment variables, and automation to eliminate friction and reduce steps.

The author created a local voice transcription and synthesis system, eliminating reliance on cloud services, prioritizing privacy, and enabling seamless conversion between text and audio while maintaining control over their data.

A Game-Changer for Articulating Visual and Functional Needs

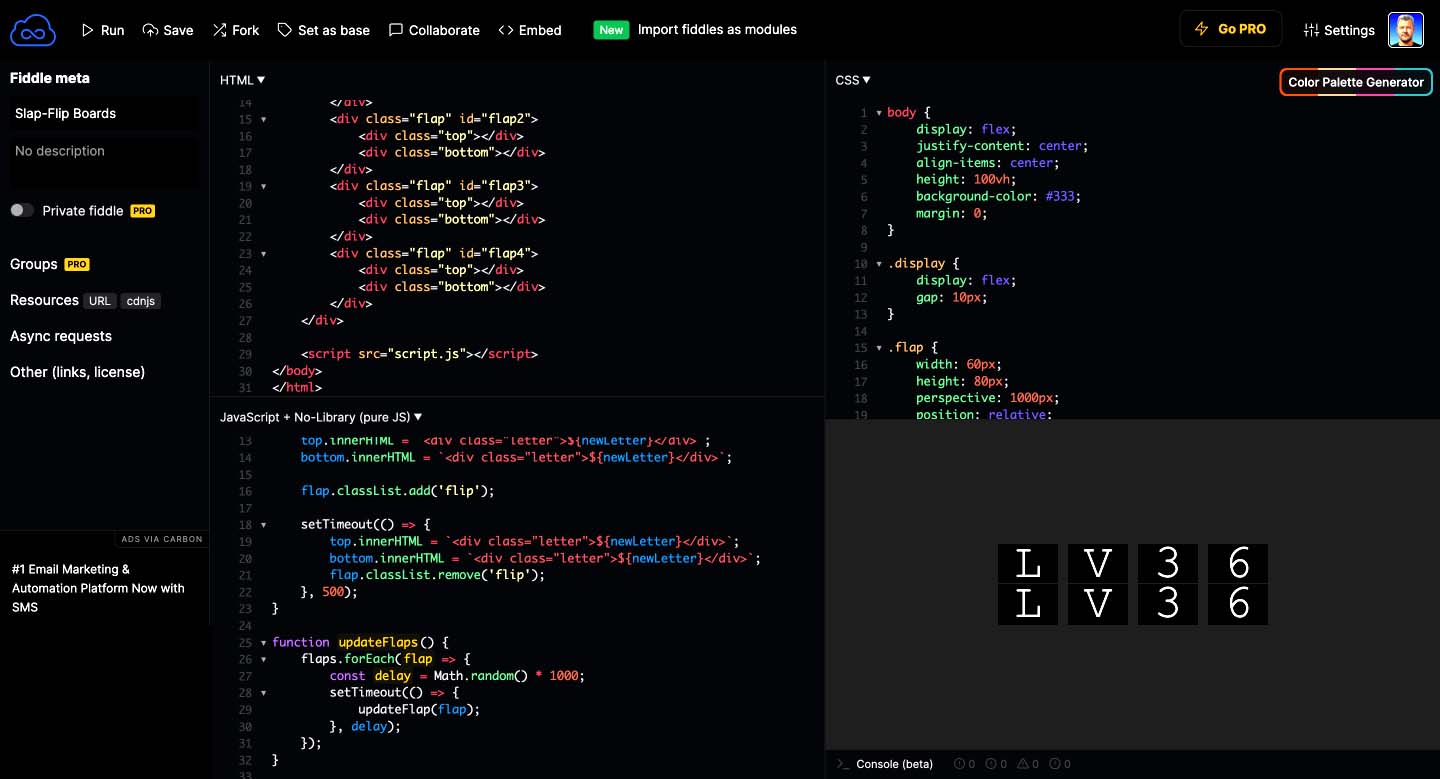

As an old-school UX and UI designer, I found incorporating AI into my workflow a no-brainer, and it has transformed how I approach design and development. With AI, I can frame my visual and functional requirements precisely, which lets me identify potential design and engineering issues before presenting them to developers. This is a far more efficient use of our limited time.

Read more: I was interviewed by an AI tuned for UX design about my recent web design overhaul, and here's what the AI had to say.

© BERT : MCMXCV — MMXXVI